Migrating Photoworks to Vercel AI Gateway

Photoworks: A labour of love for the synergy of art, photography and technology

I love photography and art, and I've worked for 10 years in the TV industry, so I've always had a passion for the making of images: lighting, colour theory, portrait photography. Of all the uses of generative AI, it was Stable Diffusion and PyTorch that got me really excited. Watching the first images emerge from random pixels in Fooocus felt like pure magic.

As a technologist I loved diving into the computer science of how this works. Stable Diffusion starts from random pixels and uses a model's trained weights to change them gradually, step by step, until they converge on its calculated value of "correctness". For me the next stage in this journey was to create my own training weights from my own images, so that SD could create images with the likenesses of the people I know and love, with endless possibilities for creativity.

At the time my wife and I were living at her family home in Puerto Rico. She needed professional profile pictures for work, and a department store, stylist and photographer weren't readily available down the road. This felt like the first real use case. We were able to create images that were truly of her, trained on 20+ random smartphone pictures, but with perfect business attire, lighting and so on.

This technology was significant enough that I didn't want to just make a hobby project. As an engineer I wanted to learn the craft of making this into a professional product that professionals could rely on and pay to use. This is the difference between "coding" and the craft of engineering.

Running this on a professional scale, and not just on a local graphics card, meant utilising professional-grade NVIDIA H100/H200 chips, the type hoarded in data centres by the leading tech companies.

The return of servers

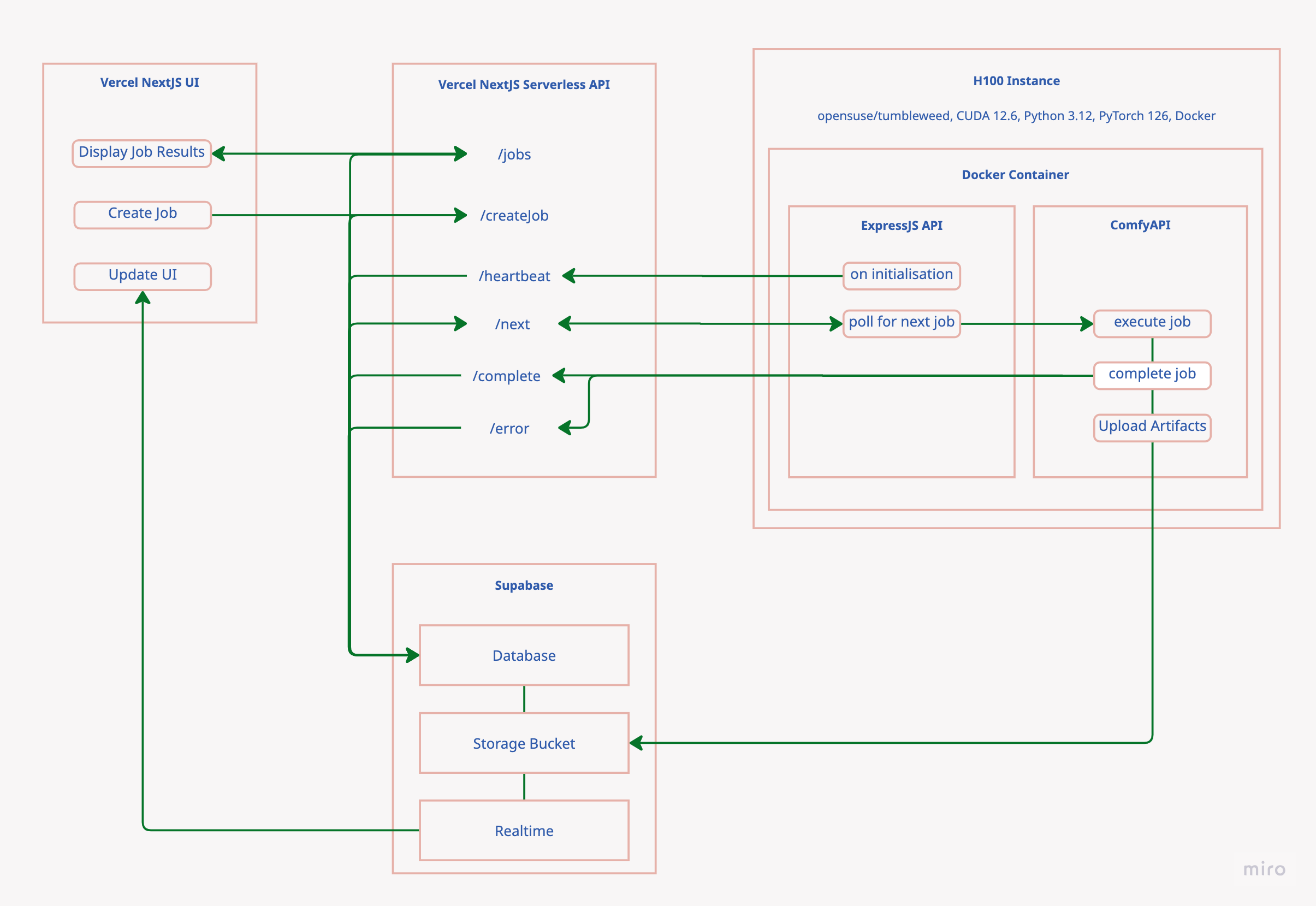

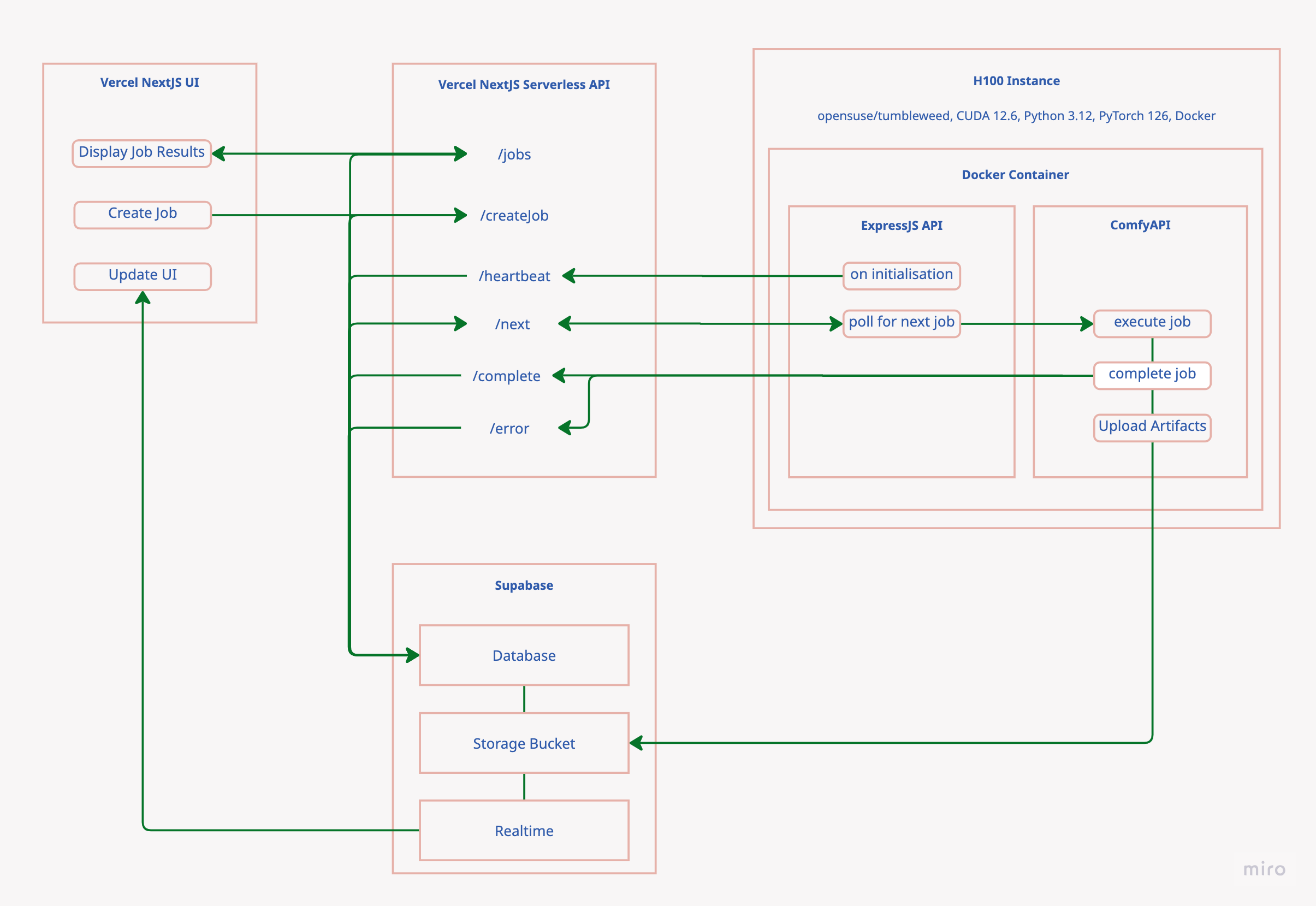

After the industry's mindset shift to serverless architecture in recent years, this challenge has needed an interesting return to managing servers. The core of Photoworks is a Dockerised worker. It is built on a CUDA-compatible Linux base image, with PyTorch handling the inference and training tasks. The workflows are designed and tested in ComfyUI.

The Photoworks worker hosts a simple Node Express API. After initialisation it makes regular heartbeat calls to the central NextJS API, reporting its busy/idle/error state. The central API uses this to auto-scale according to the capacity needed. When idle, the worker also regularly calls the central /next API to ask for its next job. At the end of a job workflow the worker uploads the resulting asset to a Supabase bucket and calls /complete on the central API.
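The worker's side of this protocol can be sketched roughly as follows. This is a minimal illustration, not the actual Photoworks code: the `CentralApi` interface, the `tick` function and the field names are all hypothetical, and the transport is injected so the loop can be run without a network. For brevity the heartbeat and the /next poll share one tick, whereas the real worker presumably runs them on separate timers.

```typescript
type WorkerState = "idle" | "busy" | "error";

interface Job { id: string; workflow: string }

interface WorkerCtx { id: string; state: WorkerState }

// Hypothetical wrapper around the central API's heartbeat, /next and /complete calls.
interface CentralApi {
  heartbeat(workerId: string, state: WorkerState): Promise<void>;
  next(workerId: string): Promise<Job | null>; // null when the queue is empty
  complete(jobId: string, assetUrl: string): Promise<void>;
}

// One polling tick: report state, and if idle, ask for a job and run it.
// runWorkflow stands in for the workflow run plus the bucket upload,
// and resolves to the uploaded asset's URL.
async function tick(
  worker: WorkerCtx,
  api: CentralApi,
  runWorkflow: (job: Job) => Promise<string>,
): Promise<void> {
  await api.heartbeat(worker.id, worker.state);
  if (worker.state !== "idle") return;

  const job = await api.next(worker.id);
  if (!job) return;

  worker.state = "busy";
  try {
    const assetUrl = await runWorkflow(job);
    await api.complete(job.id, assetUrl);
    worker.state = "idle";
  } catch {
    // A failed job leaves the worker in "error"; the next heartbeat reports it.
    worker.state = "error";
  }
}
```

In a real worker, `tick` would be scheduled on an interval, and the `CentralApi` implementation would wrap HTTP calls to the central API.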

Autoscaling with Terraform IAC

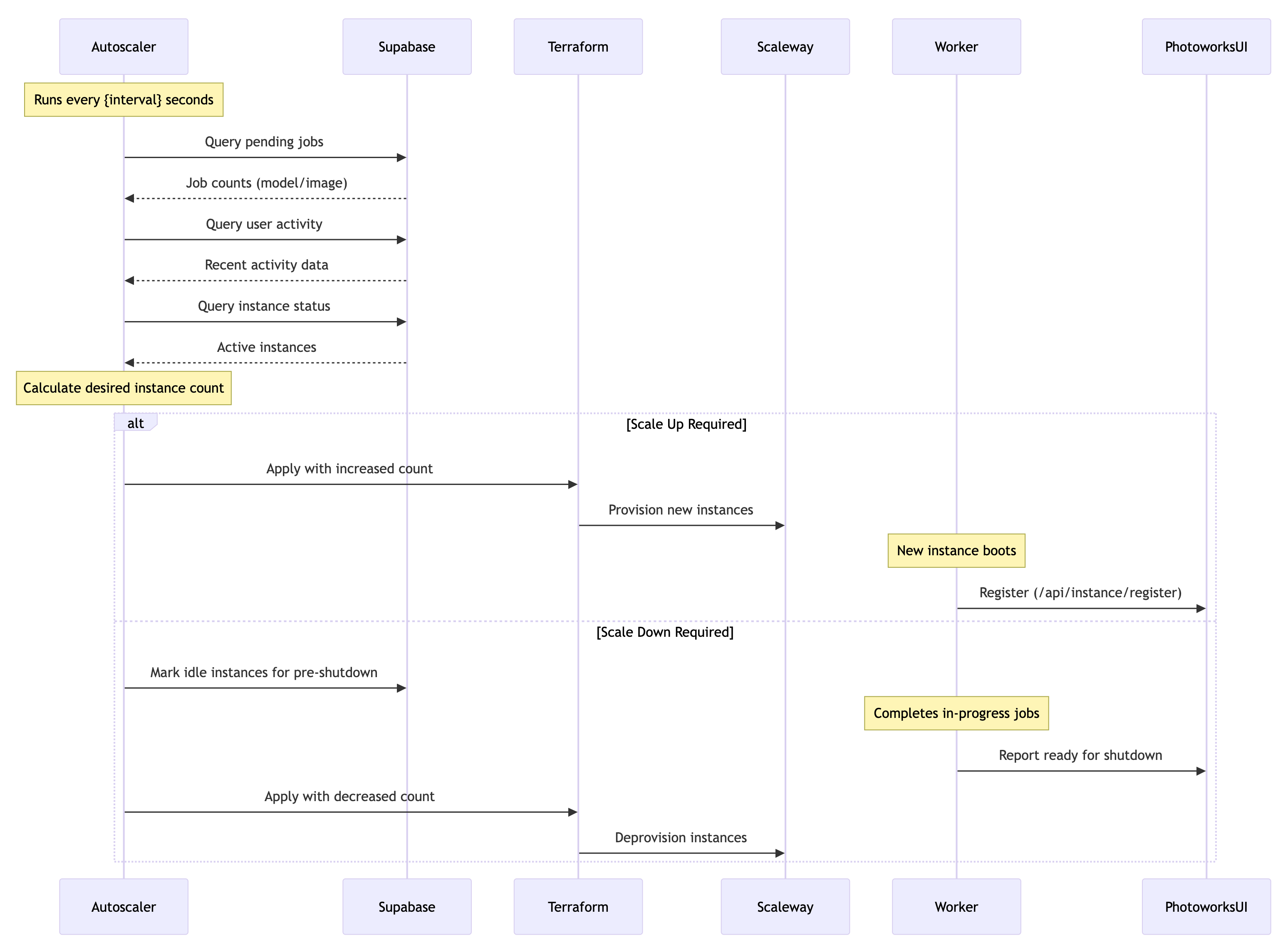

These workers are hosted on pay-on-demand servers with NVIDIA H100 GPUs. The auto-scale application runs a constant loop, assessing the length of the job queue against the availability of active workers, and uses Terraform to scale the number of servers up or down. Terraform is an infrastructure-as-code platform that describes a cloud computing environment in configuration files. When Terraform detects a change in those files, it applies the corresponding provisioning change to the chosen cloud provider (AWS, Scaleway, Koyeb etc).
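The scaling decision itself can be sketched as a small pure function. This is an assumed policy, not the actual Photoworks rule: the names `PoolSnapshot`, `ScalePolicy`, `warmIdle` and `maxWorkers` are hypothetical, and the step that writes the result into the Terraform configuration and applies it is left out.

```typescript
interface PoolSnapshot {
  queuedJobs: number;  // jobs waiting in the queue
  busyWorkers: number; // workers currently executing a job
  idleWorkers: number; // workers started and waiting for work
}

interface ScalePolicy {
  warmIdle: number;   // spare idle workers to keep ready
  maxWorkers: number; // hard cap on the pool size
}

// Target pool size: keep every busy worker, add one worker per queued job,
// plus the warm spare capacity, capped at the maximum.
function desiredWorkerCount(s: PoolSnapshot, p: ScalePolicy): number {
  return Math.min(p.maxWorkers, s.busyWorkers + s.queuedJobs + p.warmIdle);
}

// Positive: servers to add. Negative: servers that can be removed.
function scaleDelta(s: PoolSnapshot, p: ScalePolicy): number {
  return desiredWorkerCount(s, p) - (s.busyWorkers + s.idleWorkers);
}
```

Keeping the decision separate from the provisioning step means the policy can be tested on its own, while the loop only has to translate a changed target into a Terraform run.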

Photoworks worker code is deployed with GitHub pipelines. When GitHub detects a change to the main branch, a new Docker image is built and pushed to the Docker Hub registry. Once the image is tested and ready for production, the Terraform files are updated with the new image ID for future deployments.

Tradeoffs of a real business

After continuous improvement and hardening from real-world usage, this machine is working very well. It's satisfying to have ownership of all the moving parts. However, there's still a difficult trade-off. Even with fine-tuning, it takes approximately 2-3 minutes for a worker to start, initialise and be ready to execute jobs. For someone wanting an image or a trained model, this is an unacceptable wait. A pool of workers therefore needs to be kept "warm" at all times to avoid this cold start. In terms of the economics, the execution time for a job is short and therefore cheap, but the idle time is the main expense of the business.
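The arithmetic behind that last point can be made concrete with a small sketch. It assumes every warm worker is billed at the same hourly rate whether busy or idle, so the idle share of time equals the idle share of cost. The function name and parameters are hypothetical, and any inputs passed to it are illustrative rather than Photoworks figures.

```typescript
// Fraction of paid worker time spent idle over one hour.
function idleShare(
  warmWorkers: number,   // workers kept running for the whole hour
  jobsPerHour: number,   // jobs executed across the pool in that hour
  secondsPerJob: number, // execution time of a single job
): number {
  if (warmWorkers <= 0) return 0;
  const busyHours = (jobsPerHour * secondsPerJob) / 3600;
  // The pool cannot be busier than the worker-hours it has available.
  const utilisation = Math.min(1, busyHours / warmWorkers);
  return 1 - utilisation;
}
```

With short jobs and a modest job rate the utilisation term stays small, which is why the warm pool, rather than the work itself, dominates the bill.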

Evolving USPs

I still believe that there are USPs available in text-to-image/video inference for specialised use cases. The initial USP was simply to be an early adopter of the technology. Photoworks went beyond Grok and OpenAI by training a high-quality model of the subject upfront, then using those weights for inference specifically about that subject. I believe there's still a market for "pro" alternatives to mainstream text-to-image; however, the niches lie in integrations with specific businesses and platforms.

No place for sentimentality

To be successful in technology you need to have a thirst for progress and not be sentimental. Over the last couple of years, technology has been adjusting to this new paradigm of AI inference. Platforms have evolved to support the computing needs that the self-rolled system described above was built to meet.

A better platform

The recently launched Vercel AI Gateway provides a standardised interface to LLM inference platforms, integrated deeply with the Vercel/NextJS application stack. FAL is a service optimised for on-demand image/video inference, backed by the economies of scale of having enormous GPU compute capacity.

For Photoworks it makes sense to focus resources on the IP behind its specialised USPs going forward. This blog will therefore document the transition to Vercel AI Gateway, which offers a more efficient and scalable solution for our needs. By leveraging Vercel's infrastructure, we can reduce the cold start time and optimise resource usage, ultimately improving the user experience and cost-effectiveness of our platform.

A flexible platform to support a year's worth of training data. A graceful evolution

As Photoworks has been running for over a year, my customers and I have used the platform to create personalised "LoRAs": the trained weights based on their uploaded data. Because the USP is pictures that rival a professional photographer's, with perfect likeness and flexibility, the training workflow runs 2000 steps, which takes 20 minutes. It's important that the migrated platform can support this existing LoRA training data, and therefore the FLUX model. The default AI Gateway text-to-image model is Google's Nano Banana. It is excellent at editing photos and mashing them together, and it will be the model of choice when Photoworks branches out into generating Shopify-integrated e-commerce imagery. For the pro-photography use case, however, it's imperative to continue to support FLUX. AI Gateway has the flexibility to support multiple platforms. As mentioned, FAL is chosen because it has created an inference engine like my own, at a massive scale, while also supporting a vast range of models with fine-grained control if needed. It supports custom workflows and has a template supporting FLUX + LoRA.

The rest of the Photoworks infrastructure can remain the same: account management, asset storage, realtime UI, billing and so on. The change can be applied cleanly within the NextJS create-job API. The interface with the UI, where the user composes the imaging request, can be maintained with the same parameters. The job orchestration that provides event-driven handling of job complete/fail events, user token balance updates and so on can stay as-is.
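One way to picture this is as an adapter inside the create-job handler: the request shape and the emitted events stay fixed, and only the backend behind them changes. The sketch below is illustrative only. `CreateJobRequest`, `ImageBackend`, `JobEvent`, `selectBackend` and `createJob` are hypothetical names, the field names are assumptions, and the real backends would wrap calls made through the AI SDK rather than the stand-in functions shown here.

```typescript
// The request the UI already sends (field names are assumptions).
interface CreateJobRequest {
  userId: string;
  prompt: string;
  loraUrl?: string; // present when the job uses a customer's trained LoRA
}

// Anything that can turn a request into a stored asset.
interface ImageBackend {
  name: string;
  generate(req: CreateJobRequest): Promise<{ assetUrl: string }>;
}

// Events the existing orchestration already handles.
type JobEvent =
  | { type: "job-complete"; assetUrl: string }
  | { type: "job-failed"; reason: string };

// Route LoRA jobs to the FLUX + LoRA backend, everything else to the default model.
function selectBackend(
  req: CreateJobRequest,
  fluxLora: ImageBackend,
  fallback: ImageBackend,
): ImageBackend {
  return req.loraUrl ? fluxLora : fallback;
}

// The create-job handler body: same inputs and events as before, new backend.
async function createJob(
  req: CreateJobRequest,
  backend: ImageBackend,
  emit: (event: JobEvent) => void,
): Promise<void> {
  try {
    const { assetUrl } = await backend.generate(req);
    emit({ type: "job-complete", assetUrl });
  } catch (err) {
    const reason = err instanceof Error ? err.message : String(err);
    emit({ type: "job-failed", reason });
  }
}
```

Because the UI and the orchestration only see `CreateJobRequest` and `JobEvent`, swapping the self-hosted worker pool for a hosted backend does not ripple beyond this one function.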

Infrastructure As Code to Framework Defined Infrastructure. The benefits of another rung of abstraction

The beauty of the Vercel approach hinges on framework-defined infrastructure. Anyone who has worked with AWS knows that all the complexity comes up-front: every aspect of an AWS system needs to be defined ahead of deployment to provide the infrastructure needed to support it. Vercel supports the same complexity, but the complexity is deployed progressively, with a level of abstraction that hides the underlying detail and allows the developer to focus on solving the unique parts of their product. This delivers value up-front, and it also massively reduces the cost and risk of making the changes and innovations that the ever-evolving digital-product landscape requires. By moving my infrastructure under the Vercel umbrella, I've removed the burden of maintaining it myself and reduced it to lines of code in my PhotoworksUI repository. The AI SDK pioneered by Vercel means future changes needed by my business are supported by this clear interface. Infrastructure management has become a commodity within the platform, maintained by those who specialise in this layer of the abstraction.